Skills Proficiency Intelligence

A strategic imperative for workforce agility, operational rigor, and transformation readiness


Enterprises are being asked to move faster than their skills systems can keep up. AI adoption, cloud migration, and sustainability transitions are shifting what good looks like in critical roles—often faster than traditional assessment cycles can detect or correct.

At the same time, external research underscores the scale of the challenge:

- 85% of business leaders agreed that the need for skills development will dramatically increase due to AI and digital trends in the next three years. - Gartner

- 87% of companies worldwide say they either already have a skills gap or expect to within a few years. - McKinsey

- The average half-life of skills is now less than five years—and half that in some tech fields. - BCG

- Forrester notes that 9 in 10 organizations have a dedicated budget for digital initiatives, yet only about 50% of digital teams have the recommended digital competencies.

- Bain reports that 44% of executives say a lack of in-house expertise is slowing AI adoption, while AI-related job postings have grown 21% annually since 2019.

- BlackRock’s Investment Stewardship team frames human capital management as a factor in business continuity, innovation, and long-term financial value creation.

The implication is clear: skills strategy must evolve from inventory to proficiency, from ‘Do we have the skill?’ to ‘How strong is the skill, in the context that matters?’

Executive insight: why skills proficiency changes the game

Most enterprises can produce a skills list. Far fewer can answer, with confidence and granularity:

- What level of proficiency is required for a specific role, in a specific function, in a specific region?

- How does our workforce’s demonstrated proficiency compare—today, not last year?

- Where are we over-invested, under-invested, or misallocated?

We built Skills Proficiency Intelligence to answer those questions with enterprise-grade precision.

At Draup, we go beyond tagging. We have developed a quantitative, data-rich model that evaluates skill proficiency at the individual, role, and enterprise levels, reducing the subjectivity of self-reports and manager ratings.

This model is designed to replace best guesses with measurable, comparable evidence—so decisions about hiring, reskilling, redeployment, and mobility can be made with operational rigor.

The Crisis of Confidence: Why Traditional Skills Systems are Failing

Many skills programs underperform not because the intent is wrong, but because the measurement foundation is weak. Traditional approaches tend to break in four predictable ways:

- Cost and Time Intensiveness: Formal assessments and third-party evaluations are expensive, hard to scale, and often outdated by the time they are completed.

- Self-Reported Bias: Employees tend to overestimate their capabilities.

- Subjective Manager Ratings: These are highly contextual and inconsistent.

- Superficial / Static Tagging: Both resumes and job descriptions (JDs) frequently list broad skills without conveying depth or context.

The strategic risk is not merely incomplete data—it’s false confidence. A Python tag, for example, can mask materially different levels and contexts of capability across roles, teams, and regions.

From Inventory to Impact: The Draup Skills Proficiency Model

At Draup, we have built a fundamentally different approach - one that is external-facing, data-rich, and contextualized to business needs. Our Skills Proficiency Intelligence model eliminates subjectivity and provides enterprise leaders with a scalable, comparative, and actionable view of workforce capabilities.

The model has three integrated components:

1. Demand-Side Precision: Quantifying What the Business Requires

Quantify what proficiency the business actually requires.

We analyze thousands of job descriptions, not just corporate strategy statements, to understand the real skill depth companies need. This provides a granular, tactical view of required skill levels by role, by function, and by region.

This matters because demand is rarely uniform across the enterprise. The same skill label can mean different depth and application depending on role design, stack choices, operating model, and region. Our approach anchors demand in real market signals embedded in job descriptions—not broad, generalized intent statements.

2. Supply-Side Intelligence: Inferring Proficiency from Objective Signals

Measure what proficiency the workforce is likely demonstrating—not just claiming.

We infer workforce skills from external signals (resumes, patents, publications, and digital footprints), then apply a multi-factor model to score proficiency.

Our multi-factor model incorporates:

- Frequency and recency of use

- Context of usage (projects, industries)

- Peer benchmarking

- Thought leadership (e.g., publications)

- Endorsements and credibility indicators

The result is a much more objective, nuanced, and differentiated view of talent capability across the enterprise.
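As a rough illustration of how a multi-factor score could be combined, the sketch below takes one signal per factor listed above and returns a weighted average. The weights, the factor key names, and the assumption that every signal is already normalized to the range 0 to 1 are hypothetical; the source names the factors but does not say how they are weighted or combined.

```python
# Hypothetical weights: the factors come from the list above, but the
# values (and the weighted-average form itself) are assumptions.
WEIGHTS = {
    "frequency_recency": 0.30,
    "context": 0.25,
    "peer_benchmark": 0.20,
    "thought_leadership": 0.10,
    "endorsements": 0.15,
}


def proficiency_score(signals: dict) -> float:
    """Return a weighted average of factor signals, each expected in [0, 1].

    Factors missing from `signals` are treated as 0.0.
    """
    total = 0.0
    for factor, weight in WEIGHTS.items():
        value = signals.get(factor, 0.0)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{factor} must be in [0, 1], got {value}")
        total += weight * value
    # Divide by the weight sum so the result stays in [0, 1]
    # even if the weights are later changed and no longer sum to 1.
    return total / sum(WEIGHTS.values())


# Illustrative input only; these numbers are not from the source.
example = {
    "frequency_recency": 0.8,
    "context": 0.6,
    "peer_benchmark": 0.5,
    "thought_leadership": 0.0,
    "endorsements": 0.4,
}
score = proficiency_score(example)
```

A weighted average is only one plausible way to combine the factors; it is used here because it keeps each factor's contribution easy to inspect.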

3. Gap Visibility: Mapping the Delta Between Reality and Strategy

Operationalize the delta between what you need and what you have.

By comparing the required proficiency (demand) with existing proficiency (supply), we generate a clear view of skill gaps across the enterprise. And we don’t stop at a single roll-up view. This can be filtered across levels: individual, team, role-skill combinations…

Typical outputs include:

- Role-skill level mismatches

- Peer benchmarking by function and geography

- Insights filtered at individual, team, or business unit level

Most importantly, proficiency is contextual. Instead of tagging both a developer and a data scientist as having ‘Python,’ our model distinguishes between the level and context of Python proficiency required, and demonstrated, so you can take targeted action on hiring, upskilling, or internal mobility.

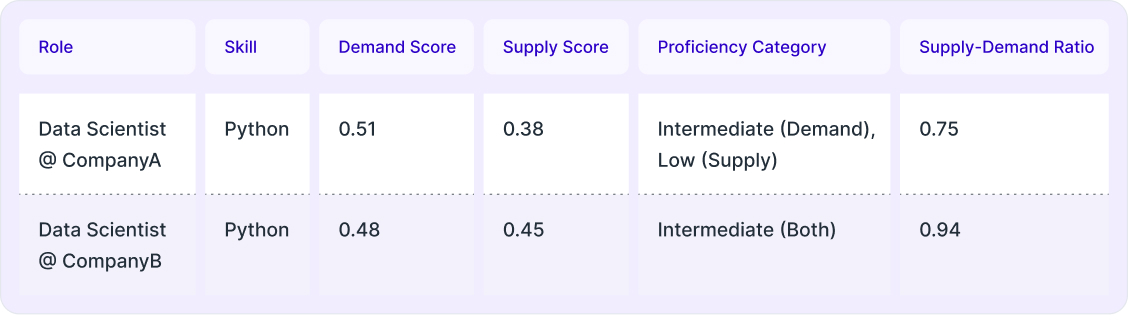

What the output looks like

Here is an illustrative example of how we express proficiency at the role-skill level:

In the sample above, the Supply-Demand Ratio is simply supply divided by demand (e.g., 0.38 / 0.51 ≈ 0.75). A ratio below 1 is a rapid indicator of under-supply relative to demand at the role-skill level.
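The ratio arithmetic can be reproduced in a few lines. This is a minimal sketch: the function name and the guard against non-positive demand are assumptions added for illustration, and the two input values are the ones quoted in the example.

```python
def supply_demand_ratio(supply: float, demand: float) -> float:
    """Supply proficiency divided by demand proficiency for one role-skill pair."""
    if demand <= 0:
        raise ValueError("demand must be positive")
    return supply / demand


ratio = supply_demand_ratio(0.38, 0.51)
print(round(ratio, 2))  # 0.75
```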

A 6-Step Operating Framework for Proficiency-Led Strategy

How to move from assessment to workforce action

This is the operating cadence we recommend for enterprises building a proficiency-led skills strategy.

Step 1: Anchor on transformation priorities

If the enterprise strategy is AI-enabled productivity, cloud modernization, or data-driven decisioning, then skills cannot be treated as a generic HR dataset. Skills become a strategic constraint—or a strategic asset.

External research reinforces that transformation intensity is rising:

- Bain notes that 97% of surveyed IT executives reported some level of generative AI testing, yet slightly less than 40% said they had scaled it across the organization.

- Gartner reports leaders are concerned learning is too slow for the volume, variety and velocity of skills needs in an AI-fueled environment.

Action: Identify the transformation initiatives where workforce proficiency is a hard dependency (e.g., cloud data platforms, GenAI engineering enablement, cybersecurity modernization).

Step 2: Define demand-side proficiency by role, function, and region

This is where many skills-based programs flatten the truth. Our model explicitly avoids that. We analyze thousands of job descriptions to provide a granular, tactical view of required skill levels - by role, by function, and by region.

Action: Prioritize a set of critical roles and identify the skill clusters that are most determinative of success.

Step 3: Establish supply-side proficiency using objective signals

We use external signals and a multi-factor model to score proficiency:

Resumes, patents, publications, and digital footprints… [with] frequency and recency… context… peer benchmarking… thought leadership… endorsements…

Action: Build an evidence-based baseline of current skill depth, not only skill presence.

Step 4: Quantify gaps with role-skill granularity

The objective is not a heatmap for its own sake. It is a decision system.

By comparing… demand and… supply, [we] generate a clear view of skill gap… filtered across… individual, team, [and] role-skill combinations…

Action: Use the gap view to identify:

- The highest-risk roles where demand exceeds supply

- Regions or teams with emerging constraints

- Adjacent talent pools where mobility is feasible
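One way to turn the gap view into a ranked list is sketched below: keep only the role-skill rows where demand exceeds supply and sort them by the size of the shortfall. The record layout, the function name, and all sample rows are hypothetical and for illustration only, apart from the 0.51 / 0.38 pair, which reuses the demand and supply figures from the earlier ratio example.

```python
from typing import NamedTuple


class RoleSkill(NamedTuple):
    role: str
    skill: str
    region: str
    demand: float  # required proficiency score
    supply: float  # demonstrated proficiency score


def rank_gaps(rows):
    """Return rows where demand exceeds supply, largest shortfall first."""
    under_supplied = [r for r in rows if r.demand > r.supply]
    return sorted(under_supplied, key=lambda r: r.demand - r.supply, reverse=True)


# Illustrative data; roles, regions, and most scores are made up.
rows = [
    RoleSkill("Data Engineer", "Python", "New York", 0.51, 0.38),
    RoleSkill("Data Engineer", "SQL", "New York", 0.60, 0.65),
    RoleSkill("Data Scientist", "Python", "Bangalore", 0.70, 0.45),
]

ranked = rank_gaps(rows)
for r in ranked:
    print(r.role, r.skill, r.region, round(r.demand - r.supply, 2))
```

Filtering the input rows by region, team, or role before calling `rank_gaps` gives the narrower views described above.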

Step 5: Precision-Targeted Interventions

Not every gap should be solved the same way. External research suggests enterprises often agree on the right lever—yet underinvest.

McKinsey found 80% of leaders in a recent survey said upskilling is the most effective way to reduce skills gaps, but only 28% were planning to invest in upskilling programs over the next two to three years.

Action: Use proficiency gaps to focus L&D where it is most likely to shift outcomes, and use hiring where supply constraints are structural.

Step 6: Monitor proficiency as a living system

Proficiency is not a one-time snapshot. It is a dynamic variable.

As the half-life of skills shrinks and transformation cycles accelerate, enterprises need to shift from asking ‘Do we have this skill?’ to ‘How strong is this skill - and where does it fall short?’

Strategic Playbook: Proficiency Intelligence in Practice

We consistently see three high-leverage application patterns:

Role-specific targeting

Identify whether Python, Scala, and SQL skills in the team are strong enough for cloud and data initiatives.

Best fit: Cloud modernization, data platform buildouts, analytics transformation, engineering productivity initiatives.

Outcome: You move from ‘we have Python’ to ‘Python proficiency is adequate for the role context that matters.’

Functional Benchmarking for Rapid Toolchain Evolution

Compare marketing tech proficiency across internal teams…

Best fit: Functions where toolchains evolve quickly and proficiency differences meaningfully affect outcomes (e.g., marketing operations, analytics, product engineering).

Outcome: You identify internal variance and prioritize enablement where it will improve execution—not just where training demand is loudest.

Region-specific gap visibility

Discover that New York teams are ‘Intermediate’ in Python, while Bangalore is ‘High’ - guiding L&D investment.

Best fit: Global delivery models, distributed engineering, hub strategies, capacity rebalancing.

Outcome: You deploy investment with geographic precision and reduce transformation drag caused by uneven capability distribution.

Strategic outcomes we enable

Skills proficiency becomes most valuable when it is explicitly connected to business outcomes. Our model is built to support:

- Strategic Workforce Planning: Align hiring, upskilling, and redeployment to business priorities.

- Future-Proofing Talent: Identify gaps early in emerging skill areas.

- Role Readiness & Mobility: Match people to future roles using hard data, not subjective reviews.

- Competitive Advantage: Benchmark against peers with an unbiased, scalable model.

- Operational Rigor: Replace perception with evidence in critical workforce decisions.
