Draup Data Now Available via the Model Context Protocol (MCP)

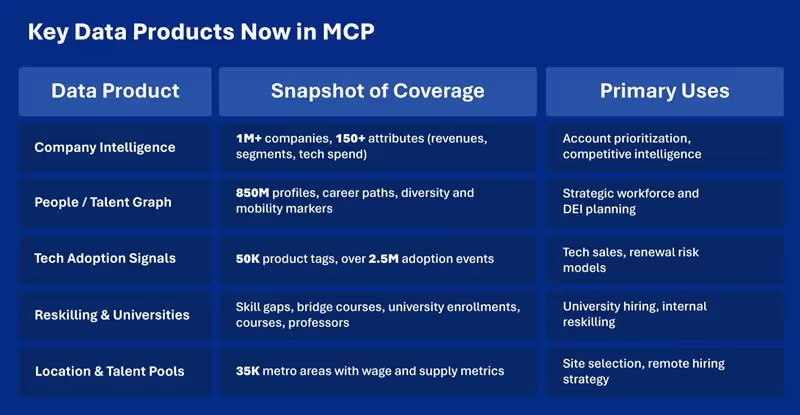

Draup’s entire sales, talent, and market intelligence graph is now available through the Model Context Protocol (MCP), an open standard that lets large language model (LLM) applications access structured, governed context on demand. Because every Draup dataset is exposed through an MCP-compliant server, developers can feed current company, people, and technology signals directly into their generative AI workflows without juggling custom APIs or brittle CSV pipelines. The result is richer prompts, faster retrieval-augmented generation (RAG), and radically simplified governance across the AI stack.

MCP Integration Brings Draup Datasets Directly to Your Workflows

1. What Makes Draup Data Valuable?

Draup aggregates multidimensional global labor and market data covering over 850 million professional profiles and more than a million companies, all enriched with firmographics, tech stack telemetry, and funding events. Its curated sales module surfaces early expansion signals, such as new R&D centers or strategic hires, so revenue teams can act quickly. The talent module tracks deep skills data to help HR leaders plan, hire, and reskill at scale. Independent reviewers highlight Draup’s depth of account insight and talent analytics, praising its impact on hyper-targeted prospecting and workforce planning.

2. A Primer on MCP (Model Context Protocol)

MCP is an open protocol, introduced by Anthropic in November 2024, that standardizes how LLMs fetch external context. Think of MCP as the “USB-C port for AI”: a single, typed interface through which models can query any compliant data source. The specification defines:

- Context Servers: These expose data via JSON or Arrow blocks, plus metadata for authentication, schema, and rate limits.

- Context Clients: LLM applications that request snippets (such as rows, documents, or embeddings) at inference time.

- Security and Governance Hooks: Token-bound scopes, row-level filters, and audit endpoints.

Microsoft, OpenAI, and major cloud vendors now showcase MCP connectors for RAG and tool use in enterprise AI.

3. How the Draup-MCP Integration Works

- Dataset Mapping: Every Draup table is registered as an mcp.dataset object with rich schema and freshness metadata.

- Streaming Change Data Capture (CDC): Draup pushes daily updates, such as new hires, funding rounds, or tech install changes, so LLMs can reason on the latest state without reindexing.

- Typed Query Endpoints: Developers issue SELECT-style filters, such as company_id, round_type, or skill_tag, and receive compressed Arrow batches optimized for vector stores.

- Governed Access: Role-based and attribute-based policies inherited from MCP ensure that PII is masked and that entitlements match enterprise standards.

Because MCP clients pull context on demand, your application retrieves only the roughly 2 KB needed for a prompt instead of shipping gigabyte extracts downstream.
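To make the typed, SELECT-style filters more concrete, here is a minimal Python sketch of how a client might assemble filter arguments before sending them to a tool. The three field names come from the list above; the helper `build_filter_arguments` and the exact shape the Draup server accepts are assumptions for illustration, not a documented Draup API.

```python
# Field names mentioned in the text; the real server schema may differ (assumption).
ALLOWED_FILTERS = {"company_id", "round_type", "skill_tag"}


def build_filter_arguments(**filters) -> dict:
    """Validate filter names and drop unset values.

    Hypothetical helper: it only shapes a dictionary of arguments and
    does not contact any server.
    """
    unknown = set(filters) - ALLOWED_FILTERS
    if unknown:
        raise ValueError(f"unsupported filter(s): {sorted(unknown)}")
    # Keep only the filters the caller actually set.
    return {name: value for name, value in filters.items() if value is not None}


if __name__ == "__main__":
    # Sample values are invented for the example.
    print(build_filter_arguments(round_type="Series A", skill_tag=None))
```

Rejecting unknown filter names on the client side is a design choice: it surfaces typos before a request is sent, rather than relying on the server to report them.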

4. Getting Started in Under 2 Minutes

- Go to the settings page in your MCP client where MCP servers are configured (the location varies by client).

- Register a new MCP server by adding a configuration block for the Draup MCP server:

{
  "mcp": {
    "inputs": [],
    "servers": {
      "draup-mcp-server": {
        "url": "DRAUP_MCP_ENDPOINT",
        "headers": {
          "Authorization": "Bearer <BEARER_TOKEN>"
        }
      }
    }
  }
}

Contact Draup to obtain the values for DRAUP_MCP_ENDPOINT and BEARER_TOKEN.

- Save the configuration and restart or refresh your MCP client application.

- You should see a success message, and the available Draup tools will be listed.

- Your MCP client should now be connected to the Draup Remote MCP Server and ready to use.
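If you prefer to generate the configuration block rather than edit it by hand, which helps avoid quoting mistakes, the following Python sketch produces the same structure. It assumes the endpoint and token are stored in environment variables named `DRAUP_MCP_ENDPOINT` and `DRAUP_BEARER_TOKEN`; those variable names and the helper `build_draup_config` are illustrative, and your MCP client may expect different top-level keys.

```python
import json
import os


def build_draup_config(endpoint: str, token: str) -> dict:
    """Return a client configuration block mirroring the one shown above."""
    if not endpoint or not token:
        raise ValueError("endpoint and token are both required")
    return {
        "mcp": {
            "inputs": [],
            "servers": {
                "draup-mcp-server": {
                    "url": endpoint,
                    "headers": {"Authorization": f"Bearer {token}"},
                }
            },
        }
    }


if __name__ == "__main__":
    # Hypothetical environment variable names; fall back to the placeholders.
    config = build_draup_config(
        os.environ.get("DRAUP_MCP_ENDPOINT", "DRAUP_MCP_ENDPOINT"),
        os.environ.get("DRAUP_BEARER_TOKEN", "<BEARER_TOKEN>"),
    )
    print(json.dumps(config, indent=2))
```

Serializing with `json.dumps` guarantees straight quotes and balanced braces, the two things most likely to go wrong when a block is copied from a web page.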

5. Example: Hyper-Personalized Sales Email in 30 Lines

Sample Request:

Find all VPs of Sales at small companies that recently raised a Series A round.

POST /<DRAUP_MCP_ENDPOINT>/

{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "tools/call",
  "params": {
    "name": "<TOOL_NAME>",
    "arguments": {}
  }
}
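The request body above can be assembled programmatically. MCP messages follow JSON-RPC 2.0, so a complete request carries `jsonrpc` and `id` fields alongside `method` and `params`. The Python sketch below only builds the payload; the tool name and argument keys in the usage lines are hypothetical, since the actual names come from the tool list your client shows after connecting.

```python
import json
from itertools import count

# Each JSON-RPC request needs its own id so responses can be matched to it.
_request_ids = count(1)


def build_tools_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    payload = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(payload)


if __name__ == "__main__":
    # "search_executives" and its argument keys are made up for illustration.
    print(build_tools_call("search_executives", {"round_type": "Series A"}))
```

In practice an MCP client library handles this framing for you; the sketch is only meant to show what travels over the wire.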

Prompt Template:

You are an SDR. Draft an opening email referencing {{company_name}}’s recent {{round_type}} round and the hiring of {{new_vp_sales}}.

With MCP, the LLM receives factual context at generation time. There is no need for manual ETL or brittle scraping.
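As a rough illustration of how the prompt template gets filled, the Python sketch below substitutes `{{placeholder}}` fields with values from a context dictionary. The helper `render_prompt` and the sample values are invented for the example; in a real workflow the values would come from the tool response.

```python
import re

# Matches {{ field_name }} with optional surrounding whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, context: dict) -> str:
    """Replace every {{field}} in the template with its value from context."""

    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in context:
            # Fail loudly rather than send a half-filled prompt to the model.
            raise KeyError(f"missing context field: {key}")
        return str(context[key])

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = (
        "You are an SDR. Draft an opening email referencing "
        "{{company_name}}’s recent {{round_type}} round and the hiring "
        "of {{new_vp_sales}}."
    )
    # Sample values are fictional.
    sample = {
        "company_name": "Acme Robotics",
        "round_type": "Series A",
        "new_vp_sales": "Jane Doe",
    }
    print(render_prompt(template, sample))
```

Raising on a missing field is deliberate: a prompt with an unfilled placeholder tends to produce an email that references nothing factual.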

6. Early Results from Beta Users

- A global SaaS vendor cut 40 hours per month of manual data preparation by switching its RAG pipeline from custom APIs to MCP-based Draup feeds, while seeing a 2× increase in first-call meeting rates.

- An HR analytics team integrated Draup’s diversity metrics into its skills-gap copilot, reducing median query latency from 1.8 seconds to 220 milliseconds thanks to on-demand context.

Ready to Explore?

Email support@draup.com for a sandbox key. In just a few minutes, you can be streaming Draup’s market-moving intelligence directly into your GenAI applications, powered by the Model Context Protocol.
